Uploading Files To Data Lake Store With PowerShell: Part One

Hello!

I’ve recently been working on uploading files to Azure Data Lake Store. It’s quite straightforward, and I think it’s a decent introduction to automating a deployment with Azure, as well as a good example of writing scripts that are idempotent, so I’m going to go through the scripts from beginning to end. I’ll cover one function per day, so this will take five days. I’m hoping that by going into a bit more depth, rather than trying to cram it all into one post, it will be more informative, and neither you nor I will fade out towards the end!

The complete script, which could easily be turned into a module if you wish, is available on gist.github.com and is included at the bottom of this post.

To follow along at home, you will need an Azure subscription and an account with permission to create and alter objects in that subscription (obvious as this may be).

Less obvious is just how familiar you need to be with Data Lake Store to have a crack at the demos. To be clear, you don’t need to understand what Azure Data Lake Store is, as that’s not what we’re covering here. There’s lots of documentation available via the link above; it’s all very interesting, so go and have a read.

Finally, you will need an editor such as Visual Studio Code to edit and run the scripts, as well as Azure PowerShell installed.

If you’re completely unfamiliar with PowerShell scripts that contain functions, be aware that a function needs to be declared before it can be called. This is why all the functions are at the top of the script and the code that actually executes them is at the bottom.
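To illustrate the ordering, here is a minimal sketch of the same layout. `Get-DeploymentMessage` and `$dataLakeStoreName` are hypothetical names, not taken from the actual script. PowerShell runs a script from top to bottom, so moving the call above the `Function` block would fail with a "not recognized as the name of a cmdlet, function..." error.

```powershell
# --- Functions at the top ---
# Hypothetical function: it must be declared before any line that calls it runs.
Function Get-DeploymentMessage {
    param (
        [string] $AccountName
    )
    return "Deploying Data Lake Store account '$AccountName'"
}

# --- Variables and execution at the bottom ---
# Hypothetical variable standing in for the values you fill in yourself.
$dataLakeStoreName = "mydatalakestore"
Get-DeploymentMessage -AccountName $dataLakeStoreName
```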

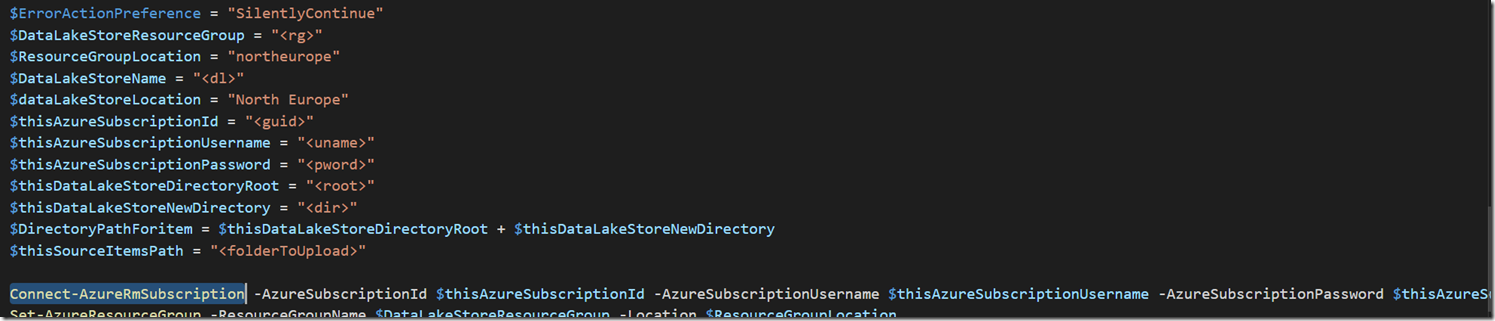

Scroll down to the very bottom of the script and you will see some variables. Fill these in with values that are relevant to your own environment. Be aware that Data Lake Store account names may contain only lowercase letters and numbers, with no spaces.
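If you want to catch a bad account name before Azure rejects it, a quick check can help. This is a minimal sketch, not part of the original script; `Test-DataLakeStoreAccountName` is a hypothetical helper that only checks the rule stated above (lowercase letters and digits, no spaces). Azure enforces further rules, such as a length limit, which this sketch does not check.

```powershell
# Hypothetical helper: checks a proposed Data Lake Store account name
# contains only lowercase letters and digits (which also rules out spaces).
Function Test-DataLakeStoreAccountName {
    param (
        [string] $Name
    )
    # -cmatch is case-sensitive; plain -match would wrongly accept uppercase letters.
    return $Name -cmatch '^[a-z0-9]+$'
}

# Example usage with a hypothetical account name.
$dataLakeStoreName = "mydatalakestore01"
if (-not (Test-DataLakeStoreAccountName -Name $dataLakeStoreName)) {
    throw "'$dataLakeStoreName' is not a valid Data Lake Store account name."
}
```

The choice of `-cmatch` over `-match` is the important detail: PowerShell comparison operators are case-insensitive by default.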

The first function we execute is “Connect-AzureRmSubscription”. As the approved verb “Connect” implies, it authenticates with Azure Resource Manager and connects to a subscription. This subscription is where we will create our Data Lake Store.

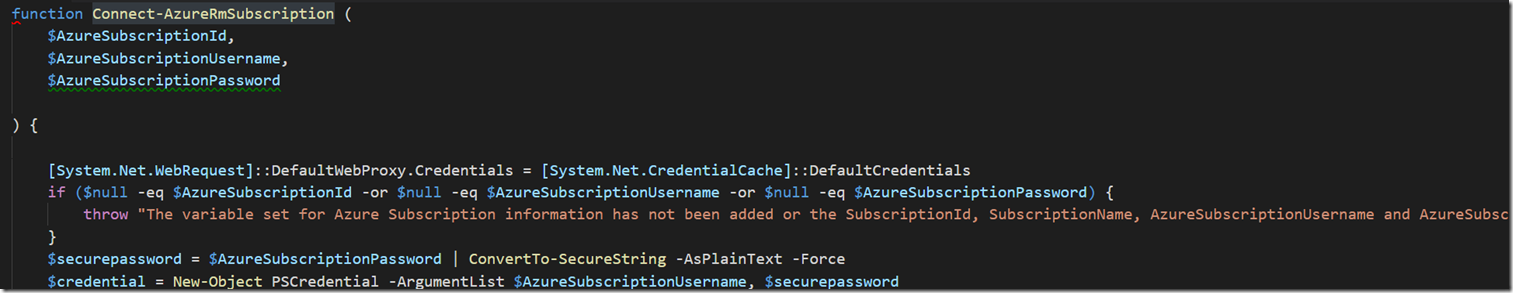

This function checks that none of the parameters are empty and, if they are all populated, creates a PSCredential object so that our password is not held in clear text. Obviously in this demo we are passing in a clear-text password, but in TeamCity, Octopus, VSTS Release and similar tools you can mark the variable as a password so that its value is obfuscated.
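Since the function itself is only shown as a screenshot, here is a minimal sketch of the same idea, assuming the user name and password arrive as plain strings. `New-DeploymentCredential` is a hypothetical name; `ConvertTo-SecureString` and `System.Management.Automation.PSCredential` are standard PowerShell, and a PSCredential is what `Login-AzureRmAccount -Credential` accepts.

```powershell
# Hypothetical helper: validates the inputs, then wraps them in a PSCredential.
Function New-DeploymentCredential {
    param (
        [string] $UserName,
        [string] $Password
    )
    # Fail fast if either value is missing or blank.
    if ([string]::IsNullOrWhiteSpace($UserName) -or [string]::IsNullOrWhiteSpace($Password)) {
        throw "UserName and Password must both be supplied."
    }
    # Convert the plain-text password into a SecureString.
    $securePassword = ConvertTo-SecureString -String $Password -AsPlainText -Force
    # Build the credential object that login cmdlets accept via -Credential.
    return New-Object -TypeName System.Management.Automation.PSCredential -ArgumentList $UserName, $securePassword
}

# Example usage with placeholder values.
$cred = New-DeploymentCredential -UserName "user@contoso.com" -Password "not-a-real-password"
```

Note that the plain-text `$Password` parameter still exists for the duration of the call; the SecureString keeps it out of the credential object itself, which is why masking the variable in your release tool still matters.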

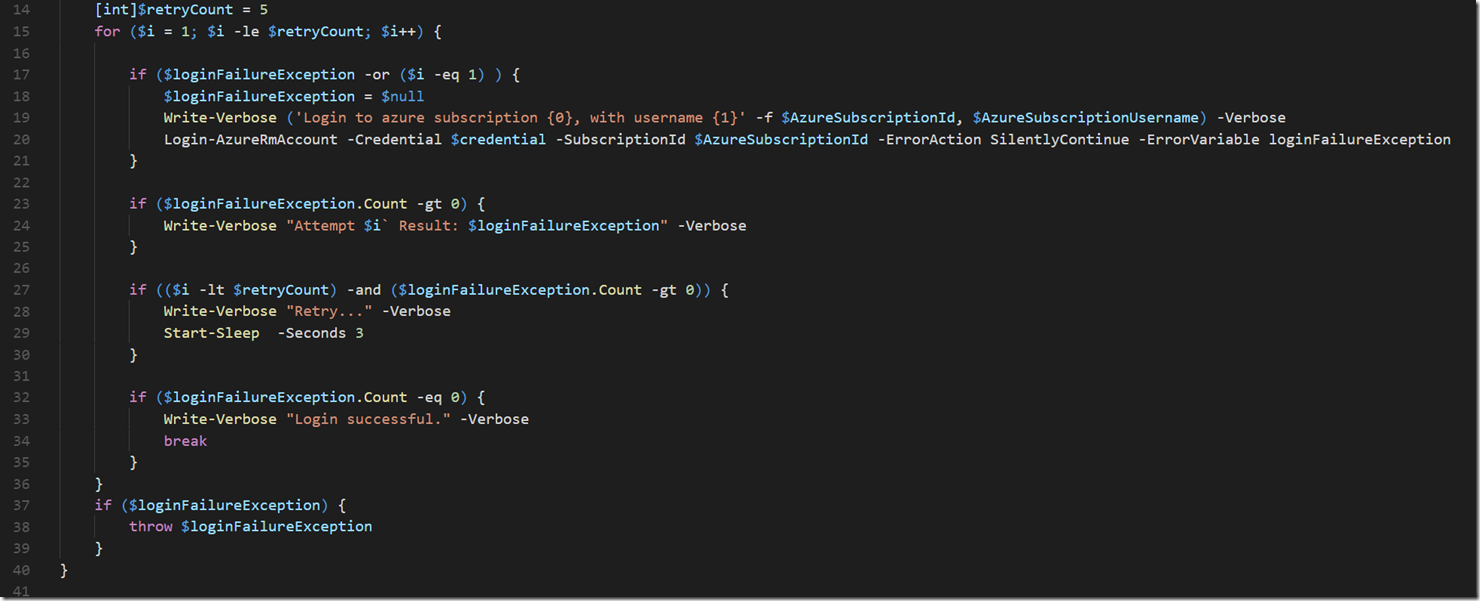

And because Azure is a little... temperamental when it comes to connections, the function retries the login. If the login is still throwing an exception after the fifth attempt, then something is definitely wrong, and an exception is thrown. But once we’re logged in, we stay logged in for the entire PowerShell session, so we can run the other commands without having to authenticate again or execute them against a connection object each and every time.
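The retry logic can be sketched as a small reusable helper. This is an illustration under assumptions, not the script’s own code: `Invoke-WithRetry`, its parameters and the two-second delay are hypothetical choices. It runs a script block up to `$MaxAttempts` times and rethrows once the last attempt fails, mirroring the five-attempt behaviour described above.

```powershell
# Hypothetical helper: runs a script block, retrying on terminating errors.
Function Invoke-WithRetry {
    param (
        [Parameter(Mandatory = $true)] [scriptblock] $ScriptBlock,
        [ValidateRange(1, 100)] [int] $MaxAttempts = 5,
        [int] $DelaySeconds = 2
    )
    for ($attempt = 1; $attempt -le $MaxAttempts; $attempt++) {
        try {
            # Return the script block's output as soon as it succeeds.
            return & $ScriptBlock
        }
        catch {
            # Out of attempts: something is definitely wrong, so give up.
            if ($attempt -eq $MaxAttempts) {
                throw "Failed after $MaxAttempts attempts: $($_.Exception.Message)"
            }
            Write-Warning "Attempt $attempt failed: $($_.Exception.Message). Retrying in $DelaySeconds second(s)..."
            Start-Sleep -Seconds $DelaySeconds
        }
    }
}

# Example usage (requires the AzureRM module and a $cred PSCredential):
# Invoke-WithRetry -ScriptBlock { Login-AzureRmAccount -Credential $cred -ErrorAction Stop }
```

Passing `-ErrorAction Stop` inside the script block matters: `try`/`catch` only sees terminating errors, so a non-terminating failure would otherwise slip past the retry.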

OK, so we’ve got a connection to the subscription, and next time we will pick up here and go to the next level, which is about Resource Managers.

As promised, here is the full script.